Transfer Learning

It’s no secret that artificial intelligence (AI) aims to teach machines to think and act as humans do. That may seem like an impossible task, but the field has produced remarkable achievements. The basic human act of communication, for instance, has been taught to machines in an area called “conversational AI.”

And would you believe that programs can detect a person’s emotions and respond accordingly? Experts are studying artificial emotional intelligence extensively in what we know as “affective computing.”

Given the variety of fields that AI touches, it’s no surprise that experts are also exploring how humans learn. Transfer learning is one result of that work, and in this post, we’ll look at what transfer learning is and how it works.


What Strategies Are Transfer Learning Techniques Based On?

Transfer learning is based on a simple idea: reuse the knowledge acquired in other settings (the sources) to solve a particular problem (the target). Within this framework, approaches differ in what knowledge is transferred, and in when and how the transfer is carried out. In general terms, we can distinguish three types of transfer learning:

Inductive Transfer Learning

In this approach, the source and target domains are the same (same data), but the source and target tasks are different, though related. The idea is to use existing models to narrow down the set of possible models for the target task (the model bias), as illustrated in the following figure:

For example, it is possible to use a model trained to detect animals in images to build a model capable of identifying dogs.
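To make the idea concrete, here is a minimal Python sketch of one common form of inductive transfer, feature extraction with frozen layers: the layers learned on the source task are kept fixed, and only a new output head is trained on a small target dataset. Everything here is an assumption for illustration: `BACKBONE` is a hypothetical set of weights standing in for layers learned on the source task (detecting animals), the toy target data is invented, and the helper names are our own. A real system would use a deep network and a framework such as PyTorch or Keras.

```python
import math

# Hypothetical weights standing in for layers learned on the source task
# (e.g. detecting animals). They are reused as-is and never updated.
BACKBONE = [[1.0, -1.0, 0.5],
            [0.5, 1.0, -1.0],
            [-1.0, 0.5, 1.0]]

def features(x):
    # One frozen ReLU layer: the representation transferred from the source task.
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BACKBONE]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softplus(t):
    # Numerically stable log(1 + exp(t)).
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def score(head, f):
    # New task-specific head: bias + linear combination of the frozen features.
    return head[0] + sum(w * fi for w, fi in zip(head[1:], f))

def log_loss(head, data):
    # Mean binary cross-entropy of the head on (input, label) pairs.
    total = 0.0
    for x, y in data:
        z = score(head, features(x))
        total += y * softplus(-z) + (1 - y) * softplus(z)
    return total / len(data)

def train_head(data, lr=0.1, epochs=200):
    # Full-batch gradient descent on the head only; BACKBONE is not touched.
    feats = [(features(x), y) for x, y in data]  # computed once: layers are frozen
    head = [0.0] * (len(BACKBONE) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(head)
        for f, y in feats:
            err = sigmoid(score(head, f)) - y
            grad[0] += err
            for j, fj in enumerate(f):
                grad[j + 1] += err * fj
        head = [h - lr * g / len(feats) for h, g in zip(head, grad)]
    return head

# Tiny invented target dataset: 3 input values per example, label 1 = "dog".
target = [([1.0, 0.0, 0.2], 1), ([0.9, 0.1, 0.0], 1),
          ([0.0, 1.0, 0.8], 0), ([0.1, 0.9, 1.0], 0), ([0.2, 0.0, 0.9], 0)]

head = train_head(target)
print("loss before:", log_loss([0.0] * 4, target), "after:", log_loss(head, target))
```

Because the backbone is frozen, its features are computed once and only four numbers (the head’s bias and weights) are learned from the target examples. When more target data is available, a common next step is to also update the backbone with a small learning rate (full fine-tuning).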

Unsupervised Transfer Learning

As in inductive transfer learning, the source and target domains are similar, but the tasks are different. The difference is that the data in both domains is unlabeled.

It is generally easier to obtain large amounts of unlabeled data from databases and web sources than labeled data. That is why combining unsupervised learning with transfer learning has generated a lot of interest.

For example, self-taught clustering is a method that clusters a small collection of unlabeled target data with the help of a large amount of unlabeled source data. This technique has been shown to outperform traditional state-of-the-art clustering methods applied to the unlabeled target data alone.
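The published self-taught clustering algorithm is based on co-clustering and is more involved than can be shown here. As a much simpler stand-in for the same idea, the Python sketch below learns cluster centroids with plain k-means on a large unlabeled source set, then uses them to initialize k-means on a tiny unlabeled target set. The data, the two-cluster setup, and the initialization rule are all assumptions made for illustration.

```python
def sq_dist(a, b):
    # Squared Euclidean distance between two 2-D points.
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def nearest(point, centroids):
    # Index of the centroid closest to the point.
    return min(range(len(centroids)), key=lambda i: sq_dist(point, centroids[i]))

def kmeans(points, centroids, iters=10):
    # Plain k-means (Lloyd's algorithm) starting from the given centroids.
    centroids = [tuple(c) for c in centroids]
    for _ in range(iters):
        groups = [[] for _ in centroids]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its group; keep it if the group is empty.
        centroids = [
            (sum(p[0] for p in g) / len(g), sum(p[1] for p in g) / len(g)) if g else c
            for g, c in zip(groups, centroids)
        ]
    return centroids

# Large unlabeled "source" set: two synthetic blobs of 9 points each.
source = [(cx + dx, cy + dy)
          for cx, cy in [(0.0, 0.0), (5.0, 5.0)]
          for dx in (-0.5, 0.0, 0.5)
          for dy in (-0.5, 0.0, 0.5)]

# Simple initialization: the first point plus the point farthest from it.
init = [source[0], max(source, key=lambda p: sq_dist(p, source[0]))]
source_centroids = kmeans(source, init)

# Small unlabeled "target" set, clustered starting from the source centroids.
target = [(0.3, 0.1), (-0.2, 0.4), (4.6, 5.3), (5.2, 4.9)]
target_centroids = kmeans(target, source_centroids, iters=5)
labels = [nearest(p, target_centroids) for p in target]
print(labels)  # [0, 0, 1, 1]
```

With only four target points, k-means started from arbitrary points can settle on a poor split; starting from centroids learned on the source data supplies structure that the target set is too small to reveal on its own.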

Transductive Transfer Learning

In this method, the source and target tasks are similar, but their domains differ in terms of data or marginal probability distributions.

For example, NLP models such as part-of-speech (POS) taggers, used for morphosyntactic word tagging, are generally trained and tested on standard corpora such as the Wall Street Journal. They can be adapted to data extracted from social networks, whose content is different from, but similar to, that of newspapers.
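One common family of techniques for this setting is instance weighting under covariate shift: assume the relationship between inputs and labels is the same in both domains, and re-weight each labeled source example by how much more (or less) likely its input is in the target domain, w(x) = p_target(x) / p_source(x). The Python sketch below estimates these weights from frequency counts of a single discrete feature and uses them to estimate a label rate for the target domain. It is not how a production POS tagger is adapted; the token feature (`capitalized`/`lowercase`), the binary tag, and the data are all hypothetical.

```python
from collections import Counter

def importance_weights(source_xs, target_xs):
    # w(x) = p_target(x) / p_source(x), estimated from empirical frequencies.
    # Assumes every target feature value also occurs in the source data.
    ps, pt = Counter(source_xs), Counter(target_xs)
    ns, nt = len(source_xs), len(target_xs)
    return [(pt[x] / nt) / (ps[x] / ns) for x in source_xs]

def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Hypothetical labeled source data (e.g. newswire tokens): a coarse token
# feature and a binary tag (1 = proper noun, say).
source_xs = ["capitalized", "capitalized", "capitalized", "lowercase"]
source_ys = [1, 1, 0, 0]
# Hypothetical unlabeled target data (e.g. social-media tokens) with a different mix.
target_xs = ["capitalized", "lowercase", "lowercase", "lowercase"]

weights = importance_weights(source_xs, target_xs)
print(weighted_mean(source_ys, [1.0] * len(source_ys)))  # 0.5 (unweighted source rate)
print(weighted_mean(source_ys, weights))  # about 0.1667 (1/6), reweighted toward the target
```

The reweighted estimate, 1/6, matches the rate implied by the target’s feature mix (a quarter of tokens are capitalized, and two-thirds of capitalized source tokens carry the tag), provided that per-feature label behavior carries over from the source. In a real adaptation setting, the same weights would multiply each source example’s contribution to the training loss. Feature values seen only in the target cannot be reweighted this way.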

What Is Transfer Learning?

Transfer learning is an AI technique in which the knowledge a machine learning (ML) model gains by solving one problem is applied to another, related problem.

Humans find transfer learning easy. We automatically apply what we learned in previous experiences when facing a new task. Consider this: do you recall being told that once you know how to ride a bicycle, learning to ride a motorcycle would be a piece of cake?

In task 1 (learning to ride a bicycle), you learn how to balance, and once you understand that, you don’t have to start from scratch for task 2 (learning to ride a motorcycle). That’s transfer learning, which is innate in humans. Machines, on the other hand, need to be taught to learn this way.

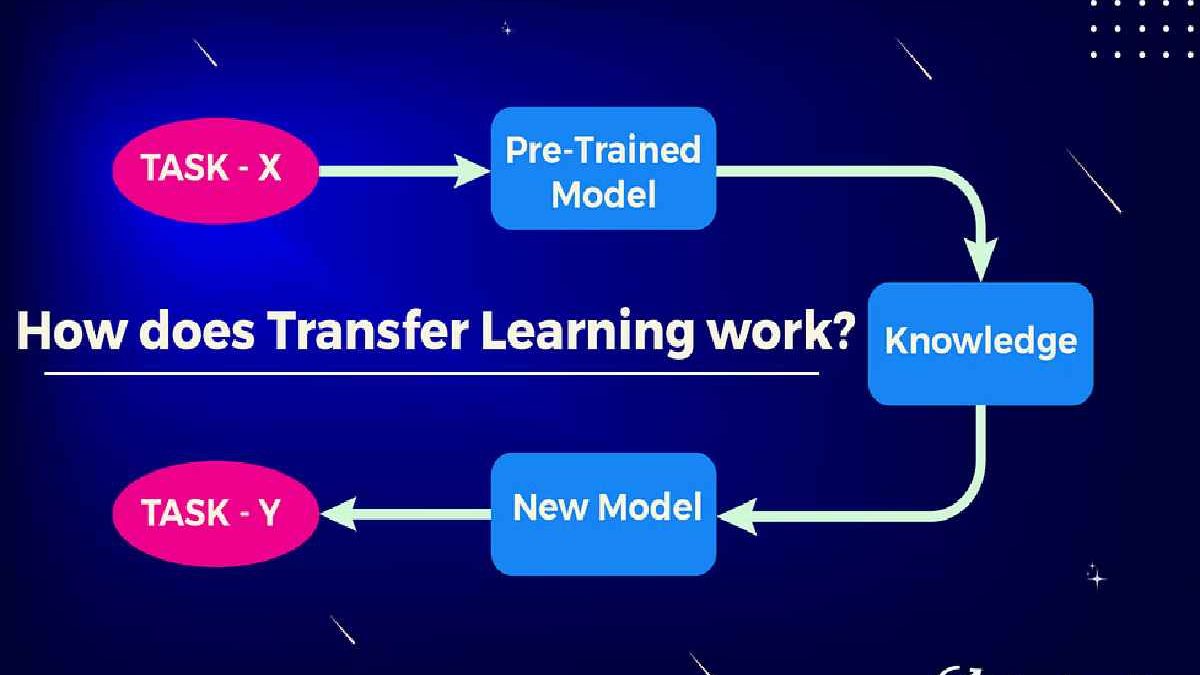

How Does Transfer Learning Work?

If you train a machine to detect the presence of people in images, you can use that knowledge to help it recognize who the person in the picture is. Note that both tasks use the same input: images.

The tasks have different but related outputs. Task 1 detects the presence of people in images, while task 2 identifies who those people are, much like the tagging feature on social media platforms.

The knowledge the machine gained from task 1 helps it accomplish task 2, making the second task easier and more cost-effective to learn. Since the model already knows how to detect people in images, you don’t have to start from scratch to teach it task 2.
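A minimal Python sketch of this reuse, under loudly stated assumptions: `EXTRACTOR` is a hypothetical frozen feature extractor standing in for the layers learned on task 1, the "images" are toy vectors of four pixel values, and the people and pictures are invented. For task 2, nothing in the extractor is retrained; the only new component is a simple identification head that stores one average feature vector per known person and labels a new picture with the nearest one. The task 1 detection head is not shown.

```python
import math

# Hypothetical frozen feature extractor standing in for layers learned on
# task 1 (detecting people). Its weights are reused, not retrained.
EXTRACTOR = [[1.0, 0.0, 0.5, 0.0],
             [0.0, 1.0, 0.0, 0.5]]

def extract(image):
    # image: a toy "picture" of 4 pixel values -> 2-D feature vector
    return [sum(w * p for w, p in zip(row, image)) for row in EXTRACTOR]

def fit_identity_head(gallery):
    # Task 2 head: one mean feature vector (centroid) per known person.
    centroids = {}
    for name, images in gallery.items():
        feats = [extract(img) for img in images]
        centroids[name] = [sum(col) / len(feats) for col in zip(*feats)]
    return centroids

def identify(image, centroids):
    # Return the person whose centroid is closest in feature space.
    f = extract(image)
    return min(centroids, key=lambda name: math.dist(f, centroids[name]))

# Invented gallery of labeled pictures for two hypothetical people.
gallery = {
    "alice": [[1.0, 0.0, 1.0, 0.0], [0.9, 0.1, 1.0, 0.0]],
    "bob":   [[0.0, 1.0, 0.0, 1.0], [0.1, 0.9, 0.0, 1.0]],
}
centroids = fit_identity_head(gallery)
print(identify([1.0, 0.1, 0.9, 0.0], centroids))  # alice
```

In this sketch, adding a new person only requires a few of their pictures to compute another centroid; the expensive part, learning good features, is inherited from task 1.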

What Are Some Applications of Transfer Learning?

The video above shows how transfer learning helps home insurance companies. The technique can also be applied in other sectors, such as:

Image Categorization

As the previous section illustrates, transfer learning helps detect and recognize objects in images, which makes image categorization much easier and faster. You no longer have to train an image classifier from scratch, because you can start from a pretrained model.

This is beneficial in several sectors, most notably the medical field. Medical imaging applications no longer have to be trained from scratch; they can start from a pretrained model and be adapted to detect abnormalities such as kidney stones in scans.

Gaming

AI has been applied to games for decades, with landmark results from the 1990s onward. Machines have defeated human champions: IBM’s Deep Blue at chess, Chinook at checkers, and AlphaGo at the classic board game Go. These systems, however, could not win at other games. For example, if you used DeepMind’s AlphaGo to play chess, it would fail, because it was trained the traditional way, on a single game.

With the help of transfer learning, though, DeepMind developed another system that can apply the strategies it learned from playing Go to a different game.

Conclusion

Although machines can’t fully replicate the way humans learn, mimicking even part of it can still change the AI landscape. Developers can reuse ML models on related tasks or problems instead of starting from scratch. Given enough data, this can cut the cost and time of developing intelligent machines.